In this blog post, I am going to describe how to configure WSO2 API Manager in a distributed setup, with a clustered gateway fronted by WSO2 ELB and WSO2 G-REG, for a distributed deployment in your production environment. Before continuing with this post, download the above-mentioned products from the WSO2 website.

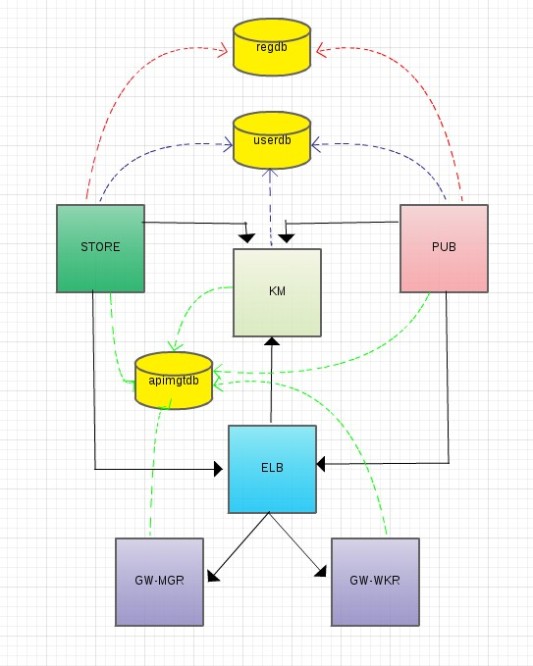

Understanding the API Manager architecture

API Manager uses the following four main components:

| Component | Description |
|---|---|
| Publisher | Creates and publishes APIs |
| Store | Provides a user interface to search, select, and subscribe to APIs |
| Key Manager | Used for authentication, security, and key-related operations |
| Gateway | Responsible for securing, protecting, managing, and scaling API calls |

Here is the deployment diagram that we are going to configure. In this setup, you have 5 APIM nodes (a gateway manager node, a gateway worker node, a publisher node, a store node, and a key manager node) and 1 ELB instance.

According to the above diagram, you need 6 WSO2 servers and a database to set up this deployment. Once you have downloaded the WSO2 products, extract them to 6 locations. These locations are referred to as follows:

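ELB / APIM_GW_MGR / APIM_GW_WKR / APIM_PUB / APIM_STORE / APIM_KM

In the paths below, <ELB_HOME>, <APIM_GW_MGR_HOME>, and so on refer to these locations, and <APIM_HOME> refers to the relevant API Manager location.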

Since you are running all the servers on the same machine, you need to make sure each server runs with a different Carbon port offset (the <Offset> value under <Ports> in its carbon.xml):

- WSO2 ELB: 0
- APIM_GW_MGR: 1
- APIM_GW_WKR: 2
- APIM_PUB: 3
- APIM_STORE: 4
- APIM_KM: 5
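Each offset is simply added to every port a server opens, so the resulting ports can be checked in advance. The sketch below assumes the usual Carbon defaults of 9443 (HTTPS) and 9763 (HTTP) for the management ports and 8243/8280 for API traffic (the same 8243 and 8280 mentioned later for the ELB). Under those assumptions, offset 5 yields the Key Manager port 9448 used in the ServerURL settings, and offset 1 yields the 9444/9764 ports that the gateway manager's port mapping refers to.

```python
# Assumed default ports of a Carbon-based server before any offset is applied.
DEFAULT_PORTS = {
    "https": 9443,             # management console / admin services over HTTPS
    "http": 9763,              # management console over HTTP
    "passthrough_https": 8243,
    "passthrough_http": 8280,
}

# Port offsets from the list above (the <Offset> value in each carbon.xml).
OFFSETS = {
    "ELB": 0,
    "APIM_GW_MGR": 1,
    "APIM_GW_WKR": 2,
    "APIM_PUB": 3,
    "APIM_STORE": 4,
    "APIM_KM": 5,
}


def effective_ports(offset: int) -> dict:
    """Return the ports a server listens on once its offset is applied."""
    return {name: port + offset for name, port in DEFAULT_PORTS.items()}


def check_no_conflicts(offsets: dict) -> None:
    """Raise ValueError if two servers would bind the same port."""
    seen = {}
    for server, offset in offsets.items():
        for port in effective_ports(offset).values():
            if port in seen:
                raise ValueError(f"{server} and {seen[port]} both use port {port}")
            seen[port] = server


if __name__ == "__main__":
    check_no_conflicts(OFFSETS)
    for server, offset in OFFSETS.items():
        print(server, effective_ports(offset))
```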

1. Configuring the databases for metadata management and registry mounting

- Download and install the MySQL server on your local machine.

- Create three databases, one for API Manager data, one for user management, and one for the shared governance and configuration registry, with the following commands:

mysql -u root -p
(enter your root password when prompted)

mysql> create database apimgtdb;
mysql> use apimgtdb;
mysql> source <APIM_GW_MGR_HOME>/dbscripts/apimgt/mysql.sql;
mysql> create database userdb;
mysql> use userdb;
mysql> source <APIM_HOME>/dbscripts/mysql.sql;
mysql> create database regdb;
mysql> use regdb;
mysql> source <APIM_HOME>/dbscripts/mysql.sql;

- Download the [1] MySQL JDBC driver archive, unzip it, and copy the MySQL JDBC driver JAR (mysql-connector-java-x.x.xx-bin.jar) to the <CARBON_HOME>/repository/components/lib directory of all 5 APIM nodes.
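Copying the driver by hand into five directories is easy to get wrong. The following is a minimal sketch using only Python's standard library; `install_jdbc_driver` is a hypothetical helper name, and it assumes each node's lib directory is `<home>/repository/components/lib` as described above. It refuses to create missing directories, so a mistyped node path fails loudly instead of silently.

```python
import shutil
from pathlib import Path

# Location of the lib directory inside each node's home directory.
LIB_SUBDIR = Path("repository") / "components" / "lib"


def install_jdbc_driver(driver_jar, node_homes) -> list:
    """Copy the JDBC driver JAR into each node's repository/components/lib directory.

    Returns the destination paths. Raises FileNotFoundError if the JAR or a
    node's lib directory does not exist.
    """
    driver_jar = Path(driver_jar)
    if not driver_jar.is_file():
        raise FileNotFoundError(driver_jar)
    copied = []
    for home in node_homes:
        lib_dir = Path(home) / LIB_SUBDIR
        if not lib_dir.is_dir():
            raise FileNotFoundError(lib_dir)
        copied.append(Path(shutil.copy2(driver_jar, lib_dir / driver_jar.name)))
    return copied
```

For example, `install_jdbc_driver("mysql-connector-java-x.x.xx-bin.jar", ["/path/to/APIM_GW_MGR", "/path/to/APIM_GW_WKR", "/path/to/APIM_PUB", "/path/to/APIM_STORE", "/path/to/APIM_KM"])`, with the placeholder paths replaced by your own extraction locations.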

2. Configure the data sources for the three databases as follows:

Open the <APIM_HOME>/repository/conf/datasources/master-datasources.xml file in all 5 API Manager components.

Enable the components to access the API Manager database by modifying the WSO2AM_DB data source in all five master-datasources.xml files as follows:

<datasource>
    <name>WSO2AM_DB</name>
    <description>The datasource used for the API Manager database</description>
    <jndiConfig>
        <name>jdbc/WSO2AM_DB</name>
    </jndiConfig>
    <definition type="RDBMS">
        <configuration>
            <url>jdbc:mysql://localhost:3306/apimgtdb?autoReconnect=true&amp;relaxAutoCommit=true</url>
            <username>user</username>
            <password>password</password>
            <driverClassName>com.mysql.jdbc.Driver</driverClassName>
            <maxActive>50</maxActive>
            <maxWait>60000</maxWait>
            <testOnBorrow>true</testOnBorrow>
            <validationQuery>SELECT 1</validationQuery>
            <validationInterval>30000</validationInterval>
        </configuration>
    </definition>
</datasource>

Enable the Key Manager, Publisher, and Store components to access the users database by configuring the WSO2UM_DB data source in their master-datasources.xml files as follows:

<datasource>
    <name>WSO2UM_DB</name>
    <description>The datasource used by user manager</description>
    <jndiConfig>
        <name>jdbc/WSO2UM_DB</name>
    </jndiConfig>
    <definition type="RDBMS">
        <configuration>
            <url>jdbc:mysql://localhost:3306/userdb?autoReconnect=true&amp;relaxAutoCommit=true</url>
            <username>user</username>
            <password>password</password>
            <driverClassName>com.mysql.jdbc.Driver</driverClassName>
            <maxActive>50</maxActive>
            <maxWait>60000</maxWait>
            <testOnBorrow>true</testOnBorrow>
            <validationQuery>SELECT 1</validationQuery>
            <validationInterval>30000</validationInterval>
        </configuration>
    </definition>
</datasource>

Enable the Publisher and Store components to access the registry database by configuring the WSO2REG_DB data source in their master-datasources.xml files as follows:

<datasource>
    <name>WSO2REG_DB</name>
    <description>The datasource used by the registry</description>
    <jndiConfig>
        <name>jdbc/WSO2REG_DB</name>
    </jndiConfig>
    <definition type="RDBMS">
        <configuration>
            <url>jdbc:mysql://localhost:3306/regdb?autoReconnect=true&amp;relaxAutoCommit=true</url>
            <username>user</username>
            <password>password</password>
            <driverClassName>com.mysql.jdbc.Driver</driverClassName>
            <maxActive>50</maxActive>
            <maxWait>60000</maxWait>
            <testOnBorrow>true</testOnBorrow>
            <validationQuery>SELECT 1</validationQuery>
            <validationInterval>30000</validationInterval>
        </configuration>
    </definition>
</datasource>
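The three data source entries above differ only in name, description, and database. As an illustration, the sketch below generates such an entry with Python's `xml.etree.ElementTree`; `mysql_datasource` is a hypothetical helper, and the credentials are the same placeholders as above. A side benefit is that ElementTree escapes the `&` in the JDBC URL as `&amp;`, which a hand-edited XML file must also do to stay well-formed.

```python
import xml.etree.ElementTree as ET


def mysql_datasource(name: str, description: str, database: str,
                     user: str = "user", password: str = "password",
                     host: str = "localhost", port: int = 3306) -> ET.Element:
    """Build a <datasource> element shaped like the master-datasources.xml entries above."""
    ds = ET.Element("datasource")
    ET.SubElement(ds, "name").text = name
    ET.SubElement(ds, "description").text = description
    jndi = ET.SubElement(ds, "jndiConfig")
    ET.SubElement(jndi, "name").text = f"jdbc/{name}"
    definition = ET.SubElement(ds, "definition", type="RDBMS")
    conf = ET.SubElement(definition, "configuration")
    url = (f"jdbc:mysql://{host}:{port}/{database}"
           "?autoReconnect=true&relaxAutoCommit=true")  # '&' is escaped on output
    settings = [
        ("url", url),
        ("username", user),
        ("password", password),
        ("driverClassName", "com.mysql.jdbc.Driver"),
        ("maxActive", "50"),
        ("maxWait", "60000"),
        ("testOnBorrow", "true"),
        ("validationQuery", "SELECT 1"),
        ("validationInterval", "30000"),
    ]
    for tag, value in settings:
        ET.SubElement(conf, tag).text = value
    return ds


if __name__ == "__main__":
    for name, desc, db in [
        ("WSO2AM_DB", "The datasource used for the API Manager database", "apimgtdb"),
        ("WSO2UM_DB", "The datasource used by user manager", "userdb"),
        ("WSO2REG_DB", "The datasource used by the registry", "regdb"),
    ]:
        element = mysql_datasource(name, desc, db)
        ET.indent(element)  # pretty-printing needs Python 3.9+
        print(ET.tostring(element, encoding="unicode"))
```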

To give each of the components access to the API Manager database, open the <APIM_HOME>/repository/conf/api-manager.xml file in each of the 5 APIM nodes and add the following line as the first child node of the root element (if it is not already there):

<DataSourceName>jdbc/WSO2AM_DB</DataSourceName>

To give the Key Manager, Publisher, and Store components access to the users database, open the <APIM_HOME>/repository/conf/user-mgt.xml file in each of these three components and add or modify the dataSource property of the <UserStoreManager> element as follows:

<UserStoreManager class="org.wso2.carbon.user.core.jdbc.JDBCUserStoreManager">
    <Property name="TenantManager">org.wso2.carbon.user.core.tenant.JDBCTenantManager</Property>
    <Property name="dataSource">jdbc/WSO2UM_DB</Property>
    <Property name="ReadOnly">false</Property>
    <Property name="MaxUserNameListLength">100</Property>
    <Property name="IsEmailUserName">false</Property>
    <Property name="DomainCalculation">default</Property>
    <Property name="PasswordDigest">SHA-256</Property>
    <Property name="StoreSaltedPassword">true</Property>
    <Property name="ReadGroups">true</Property>
    <Property name="WriteGroups">true</Property>
    <Property name="UserNameUniqueAcrossTenants">false</Property>
    <Property name="PasswordJavaRegEx">^[\S]{5,30}$</Property>
    <Property name="PasswordJavaScriptRegEx">^[\S]{5,30}$</Property>
    <Property name="UsernameJavaRegEx">^[^~!#$;%^*+={}\\|\\\\&lt;&gt;,\'\"]{3,30}$</Property>
    <Property name="UsernameJavaScriptRegEx">^[\S]{3,30}$</Property>
    <Property name="RolenameJavaRegEx">^[^~!#$;%^*+={}\\|\\\\&lt;&gt;,\'\"]{3,30}$</Property>
    <Property name="RolenameJavaScriptRegEx">^[\S]{3,30}$</Property>
    <Property name="UserRolesCacheEnabled">true</Property>
    <Property name="MaxRoleNameListLength">100</Property>
    <Property name="MaxUserNameListLength">100</Property>
    <Property name="SharedGroupEnabled">false</Property>
    <Property name="SCIMEnabled">false</Property>
</UserStoreManager>
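If you prefer to script this change instead of editing three files by hand, a minimal ElementTree sketch could look like the one below. The function names are hypothetical; the sketch touches only the dataSource property of the first `<UserStoreManager>` element and assumes the file uses no XML namespaces. It parses with `TreeBuilder(insert_comments=True)` (Python 3.8+) so that XML comments survive the rewrite, but keep a backup of the original file anyway, since formatting is not preserved exactly.

```python
import xml.etree.ElementTree as ET


def set_user_store_datasource(root: ET.Element, jndi_name: str) -> None:
    """Set (or add) the dataSource property on the first <UserStoreManager> element."""
    usm = root.find(".//UserStoreManager")
    if usm is None:
        raise ValueError("no <UserStoreManager> element found")
    for prop in usm.findall("Property"):
        if prop.get("name") == "dataSource":
            prop.text = jndi_name
            return
    # No existing property: append a new one.
    ET.SubElement(usm, "Property", name="dataSource").text = jndi_name


def update_user_mgt_file(path: str, jndi_name: str = "jdbc/WSO2UM_DB") -> None:
    """Rewrite user-mgt.xml in place, keeping XML comments."""
    parser = ET.XMLParser(target=ET.TreeBuilder(insert_comments=True))
    tree = ET.parse(path, parser=parser)
    set_user_store_datasource(tree.getroot(), jndi_name)
    tree.write(path, encoding="utf-8", xml_declaration=True)
```

The same helper, called with `"jdbc/WSO2UmDB"`, would cover the gateway nodes configured later in this post.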

To give the Publisher and Store components access to the registry database, open the <APIM_HOME>/repository/conf/registry.xml file in each of these two components and configure them as follows:

In the Publisher component's registry.xml file, add or modify the dataSource element of the <dbConfig name="govregistry"> element, along with the remoteInstance and mount entries, as follows:

<dbConfig name="govregistry">
    <dataSource>jdbc/WSO2REG_DB</dataSource>
</dbConfig>

<remoteInstance url="https://publisher.apim-wso2.com">
    <id>gov</id>
    <dbConfig>govregistry</dbConfig>
    <readOnly>false</readOnly>
    <enableCache>true</enableCache>
    <registryRoot>/</registryRoot>
</remoteInstance>

<mount path="/_system/governance" overwrite="true">
    <instanceId>gov</instanceId>
    <targetPath>/_system/governance</targetPath>
</mount>

In the Store component's registry.xml file, add or modify the dataSource element of the <dbConfig name="govregistry"> element as follows (note that this configuration is nearly identical to the previous step, with the exception of the remoteInstance URL):

<dbConfig name="govregistry">
    <dataSource>jdbc/WSO2REG_DB</dataSource>
</dbConfig>

<remoteInstance url="https://store.apim-wso2.com">
    <id>gov</id>
    <dbConfig>govregistry</dbConfig>
    <readOnly>false</readOnly>
    <enableCache>true</enableCache>
    <registryRoot>/</registryRoot>
</remoteInstance>

<mount path="/_system/governance" overwrite="true">
    <instanceId>gov</instanceId>
    <targetPath>/_system/governance</targetPath>
</mount>
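The three pieces of each registry.xml fragment are linked by name: the remoteInstance's `<dbConfig>` must match a `<dbConfig name="...">`, and each mount's `<instanceId>` must match a remoteInstance `<id>`. A typo in any of these names breaks the mount. The sketch below checks those links; `check_registry_mounts` is a hypothetical helper, and wrapping the fragment in a dummy root element (as in the example) is only needed to parse the fragment on its own, since in the real file these elements sit under the existing root.

```python
import xml.etree.ElementTree as ET


def check_registry_mounts(xml_text: str) -> list:
    """Return a list of broken dbConfig/remoteInstance/mount references."""
    root = ET.fromstring(xml_text)
    # Only top-level <dbConfig name="..."> definitions carry a name attribute;
    # the <dbConfig> child of <remoteInstance> is a reference, not a definition.
    db_configs = {db.get("name") for db in root.iter("dbConfig") if db.get("name")}
    instance_ids = set()
    problems = []
    for inst in root.iter("remoteInstance"):
        inst_id = inst.findtext("id")
        instance_ids.add(inst_id)
        ref = inst.findtext("dbConfig")
        if ref not in db_configs:
            problems.append(f"remoteInstance {inst_id!r} uses unknown dbConfig {ref!r}")
    for mount in root.iter("mount"):
        ref = mount.findtext("instanceId")
        if ref not in instance_ids:
            problems.append(f"mount {mount.get('path')!r} uses unknown instanceId {ref!r}")
    return problems
```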

Modify the /etc/hosts entries to map the relevant IP addresses to the remoteInstance URLs:

127.0.0.1 publisher.apim-wso2.com
127.0.0.1 store.apim-wso2.com

Configuring the connections among the components

You will now configure the connections between the components by modifying their <APIM_HOME>/repository/conf/api-manager.xml files. Because the Key Manager component does not depend on any other components, you do not need to change the connection settings in this file for the Key Manager.

Open the <APIM_HOME>/repository/conf/api-manager.xml files in the Gateway, Publisher, and Store components.

Gateway manager and worker nodes: configure the connection to the Key Manager component as follows:

<APIKeyManager>
    <ServerURL>https://keymanager.apim-wso2.com:9448/services/</ServerURL>
    <Username>admin</Username>
    <Password>admin</Password>
    ...
</APIKeyManager>

Publisher and Store: configure the connections to the Key Manager and Gateway as follows:

<APIKeyManager>
    <ServerURL>https://keymanager.apim-wso2.com:9448/services/</ServerURL>
    <Username>admin</Username>
    <Password>admin</Password>
    ...
</APIKeyManager>

<AuthManager>
    <ServerURL>https://keymanager.apim-wso2.com:9448/services/</ServerURL>
    <Username>admin</Username>
    <Password>admin</Password>
</AuthManager>

<APIGateway>
    <Environments>
        <Environment type="hybrid">
            <ServerURL>https://mgt.gateway.apim-wso2.com/services/</ServerURL>
            <Username>admin</Username>
            <Password>admin</Password>
            <GatewayEndpoint>http://gateway.apim-wso2.com,https://gateway.apim-wso2.com</GatewayEndpoint>
        </Environment>
    </Environments>
</APIGateway>

Here the Gateway endpoints point to the ELB host names defined in the loadbalancer.conf file. We will define these host names in a later section.

Also disable the Thrift server in the api-manager.xml file on the Store, Publisher, and Gateway worker and manager nodes as shown below:

<KeyValidatorClientType>ThriftClient</KeyValidatorClientType>
<ThriftClientPort>10397</ThriftClientPort>
<ThriftClientConnectionTimeOut>10000</ThriftClientConnectionTimeOut>
<ThriftServerPort>10397</ThriftServerPort>
<!--ThriftServerHost>localhost</ThriftServerHost-->
<EnableThriftServer>false</EnableThriftServer>
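On the four nodes that need this change (Store, Publisher, and both Gateway nodes), the flag can also be flipped programmatically. The sketch below sets every `<EnableThriftServer>` element it finds to `false`, assuming, as in the snippet above, that the element already exists and that api-manager.xml uses no XML namespaces. The function names are hypothetical; the file variant refuses to write anything if no element was found, so a missing element is easy to spot.

```python
import xml.etree.ElementTree as ET


def disable_thrift_server(root: ET.Element) -> int:
    """Set every <EnableThriftServer> element to 'false' and return how many were found."""
    found = 0
    for element in root.iter("EnableThriftServer"):
        element.text = "false"
        found += 1
    return found


def disable_thrift_in_file(path: str) -> int:
    """Apply disable_thrift_server to an api-manager.xml file in place, keeping comments."""
    parser = ET.XMLParser(target=ET.TreeBuilder(insert_comments=True))
    tree = ET.parse(path, parser=parser)
    count = disable_thrift_server(tree.getroot())
    if count == 0:
        raise ValueError(f"{path}: no <EnableThriftServer> element found")
    tree.write(path, encoding="utf-8", xml_declaration=True)
    return count
```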

Now add the following configuration to the carbon.xml file of the Publisher, Store, and Key Manager nodes.

Publisher node:

<HostName>publisher.apim-wso2.com</HostName>
<MgtHostName>mgt.publisher.apim-wso2.com</MgtHostName>

Store node:

<HostName>store.apim-wso2.com</HostName>
<MgtHostName>mgt.store.apim-wso2.com</MgtHostName>

Key Manager node:

<HostName>keymanager.apim-wso2.com</HostName>
<MgtHostName>mgt.keymanager.apim-wso2.com</MgtHostName>

Modify the /etc/hosts entries to map the relevant IP addresses to the ServerURLs and host names you just configured:

127.0.0.1 keymanager.apim-wso2.com
127.0.0.1 mgt.gateway.apim-wso2.com
127.0.0.1 gateway.apim-wso2.com
127.0.0.1 mgt.publisher.apim-wso2.com
127.0.0.1 mgt.keymanager.apim-wso2.com
127.0.0.1 mgt.store.apim-wso2.com
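When the components later move to separate machines, each of these host names has to resolve to the right IP address on every machine. A small sketch like the one below can render the entries from one host-to-IP mapping and report which names an existing hosts file is missing. The mapping uses 127.0.0.1 everywhere, as in this single-machine setup, and includes elb.wso2.com because the ELB uses it later in this post; extend it with any other host names you configure.

```python
# Host names used in this post, all mapped to the local machine for this setup.
HOSTS = {
    "publisher.apim-wso2.com": "127.0.0.1",
    "mgt.publisher.apim-wso2.com": "127.0.0.1",
    "store.apim-wso2.com": "127.0.0.1",
    "mgt.store.apim-wso2.com": "127.0.0.1",
    "keymanager.apim-wso2.com": "127.0.0.1",
    "mgt.keymanager.apim-wso2.com": "127.0.0.1",
    "gateway.apim-wso2.com": "127.0.0.1",
    "mgt.gateway.apim-wso2.com": "127.0.0.1",
    "elb.wso2.com": "127.0.0.1",
}


def hosts_lines(mapping: dict) -> list:
    """Render /etc/hosts lines, grouping host names that share an IP address."""
    by_ip = {}
    for host, ip in sorted(mapping.items()):
        by_ip.setdefault(ip, []).append(host)
    return [f"{ip} {' '.join(names)}" for ip, names in sorted(by_ip.items())]


def missing_entries(hosts_text: str, mapping: dict) -> list:
    """Return host names that hosts_text does not map to the expected IP."""
    present = set()
    for line in hosts_text.splitlines():
        fields = line.split("#", 1)[0].split()  # ignore comments
        if len(fields) >= 2:
            ip, names = fields[0], fields[1:]
            present.update((name, ip) for name in names)
    return sorted(host for host, ip in mapping.items() if (host, ip) not in present)


if __name__ == "__main__":
    print("\n".join(hosts_lines(HOSTS)))
```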

We have now configured the distributed API Manager components. Next, we need to set up the gateway cluster with the ELB.

Configuring the ELB

- Open the <ELB_HOME>/repository/conf/loadbalancer.conf file.

- Add the following configuration:

gateway {
    domains {
        wso2.gw.domain {
            tenant_range *;
            group_mgt_port 5000;
            mgt {
                hosts mgt.gateway.apim-wso2.com;
            }
            worker {
                hosts gateway.apim-wso2.com;
            }
        }
    }
}
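Because this file is brace-delimited, an unbalanced or duplicated block is easy to introduce when copying it. The sketch below is a small, hypothetical parser for the subset of the syntax used above (`key value;` pairs and `key { ... }` blocks); it is not the ELB's own parser. It raises an error on unbalanced braces or a missing `;`, and it shows how each host name maps to a cluster domain and sub-domain, which reflects how the ELB separates manager traffic from worker traffic by host name. Treating commas and spaces as separators between multiple host names is an assumption.

```python
import re


def tokenize(text: str) -> list:
    """Split the configuration into '{', '}', ';' and bare words."""
    return re.findall(r"[{};]|[^\s{};]+", text)


def _parse_block(tokens: list, i: int, depth: int):
    """Parse entries until the matching '}' (or end of input at depth 0)."""
    node = {}
    while i < len(tokens):
        key = tokens[i]
        if key == "}":
            if depth == 0:
                raise ValueError("unexpected '}'")
            return node, i + 1
        if key in ("{", ";"):
            raise ValueError(f"unexpected {key!r} at token {i}")
        i += 1
        if i < len(tokens) and tokens[i] == "{":
            node[key], i = _parse_block(tokens, i + 1, depth + 1)
            continue
        values = []
        while i < len(tokens) and tokens[i] != ";":
            if tokens[i] in ("{", "}"):
                raise ValueError(f"missing ';' after {key!r}")
            values.append(tokens[i])
            i += 1
        if i == len(tokens):
            raise ValueError(f"missing ';' after {key!r}")
        node[key] = " ".join(values)
        i += 1  # skip ';'
    if depth != 0:
        raise ValueError("missing '}'")
    return node, i


def parse_lb_conf(text: str) -> dict:
    """Parse loadbalancer.conf-style text into nested dictionaries."""
    node, _ = _parse_block(tokenize(text), 0, 0)
    return node


def host_routes(conf: dict) -> dict:
    """Map each host name to (cluster domain, sub-domain)."""
    routes = {}
    for service in conf.values():
        if not isinstance(service, dict):
            continue
        for domain, settings in service.get("domains", {}).items():
            for sub_domain in ("mgt", "worker"):
                block = settings.get(sub_domain)
                if isinstance(block, dict) and "hosts" in block:
                    for host in re.split(r"[,\s]+", block["hosts"].strip()):
                        routes[host] = (domain, sub_domain)
    return routes
```

For example, `host_routes(parse_lb_conf(open(path).read()))` on the configuration above should map gateway.apim-wso2.com to the worker sub-domain and mgt.gateway.apim-wso2.com to the mgt sub-domain of wso2.gw.domain.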

In loadbalancer.conf, we referred to several properties of the cluster, such as the domain name and sub-domains, but we did not define them there. We now define these properties as we build the cluster.

- Open the <ELB_HOME>/repository/conf/axis2/axis2.xml file.
- Locate the Clustering section and verify or configure the properties as follows (some of these properties are already set correctly by default):
  - Enable clustering for this node:
    <clustering class="org.wso2.carbon.core.clustering.hazelcast.HazelcastClusteringAgent" enable="true">
  - Set the membership scheme to wka to enable the Well Known Address registration method (this node will send cluster initiation messages to WKA members that we will define later):
    <parameter name="membershipScheme">wka</parameter>
  - Specify a domain name for the ELB node (note that this domain is for potentially creating a cluster of ELB nodes and is not the cluster of APIM nodes that the ELB will load balance):
    <parameter name="domain">wso2.carbon.lb.domain</parameter>
  - Specify the port used to communicate with this ELB node:
    <parameter name="localMemberPort">4000</parameter>
    Note: This port number will not be affected by the port offset in carbon.xml. If this port number is already assigned to another server, the clustering framework will automatically increment this port number. However, if two servers are running on the same machine, you must ensure that a unique port is set for each server.
  - Specify the host name or IP address of this member:
    <parameter name="localMemberHost">elb.wso2.com</parameter>

We have now completed the clustering-related configuration for the ELB. In the next section, we will make one last change to the ELB that will increase usability.

Configuring the ELB to listen on default ports

We will now change the ELB configuration to listen on the default HTTP and HTTPS ports.

- Open the <ELB_HOME>/repository/conf/axis2/axis2.xml file.
- Locate the Transport Receiver section and configure the properties as follows:
  - In the <transportReceiver name="http" class="org.apache.synapse.transport.passthru.PassThroughHttpListener"> transport, enable service requests to be sent to the ELB's default HTTP port instead of having to specify port 8280:
    <parameter name="port">80</parameter>
  - In the <transportReceiver name="https" class="org.apache.synapse.transport.passthru.PassThroughHttpSSLListener"> transport, enable service requests to be sent to the ELB's default HTTPS port instead of having to specify port 8243:
    <parameter name="port">443</parameter>
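The same change can be scripted against axis2.xml. This sketch looks up each `<transportReceiver>` by its name attribute and sets its port parameter; `set_receiver_ports` is a hypothetical helper, and it assumes the receivers are named http and https as above and that axis2.xml uses no XML namespaces. It raises an error rather than guessing if a receiver or its port parameter is missing.

```python
import xml.etree.ElementTree as ET


def set_receiver_ports(root: ET.Element, ports: dict) -> None:
    """Set <parameter name="port"> for each named <transportReceiver>.

    ports maps a receiver name (for example "http") to the port to listen on.
    Raises KeyError if a named receiver or its port parameter is missing.
    """
    receivers = {r.get("name"): r for r in root.iter("transportReceiver")}
    for name, port in ports.items():
        receiver = receivers.get(name)
        if receiver is None:
            raise KeyError(f"no transportReceiver named {name!r}")
        param = receiver.find("parameter[@name='port']")
        if param is None:
            raise KeyError(f"transportReceiver {name!r} has no port parameter")
        param.text = str(port)
```

For the ELB in this post, the call would be `set_receiver_ports(root, {"http": 80, "https": 443})`.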

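In the next section, we will map the host names we specified to real IPs.

Mapping the host name to the IP

The ELB's own cluster member host name, elb.wso2.com (the localMemberHost set in axis2.xml), is also used by the gateway nodes to reach the ELB as a well-known member, so map it to the ELB's IP address in /etc/hosts. The worker and manager host names from loadbalancer.conf were already mapped in an earlier step.

127.0.0.1 elb.wso2.com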

Starting the ELB server

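Start the ELB server by typing the following command in the terminal (sudo is needed because the ELB now listens on ports 80 and 443, which only privileged users can bind to on Linux and other Unix-like systems):

sudo -E sh <ELB_HOME>/bin/wso2server.sh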

Configuring the gateway databases for metadata management and registry mounting

- Using the MySQL server installed earlier, create two databases for the gateway nodes, one for user management and one for the shared governance and configuration registry, with the following commands (the drop statements clear any databases left over from a previous attempt):

mysql -u root -p
(enter your root password when prompted)

mysql> drop database if exists wso2apimum_db;
mysql> drop database if exists wso2apimreg_db;
mysql> create database wso2apimum_db;
mysql> use wso2apimum_db;
mysql> source <APIM_GW_MGR_HOME>/dbscripts/mysql.sql;
mysql> create database wso2apimreg_db;
mysql> use wso2apimreg_db;
mysql> source <APIM_GW_MGR_HOME>/dbscripts/mysql.sql;

Mounting the databases to the registry

Configure <APIM_HOME>/repository/conf/datasources/master-datasources.xml as below in both the worker and manager nodes:

<datasource>
    <name>WSO2SharedDB</name>
    <description>The datasource used for registry</description>
    <jndiConfig>
        <name>jdbc/WSO2SharedDB</name>
    </jndiConfig>
    <definition type="RDBMS">
        <configuration>
            <url>jdbc:mysql://localhost:3306/wso2apimreg_db</url>
            <username>root</username>
            <password>root</password>
            <driverClassName>com.mysql.jdbc.Driver</driverClassName>
            <maxActive>50</maxActive>
            <maxWait>60000</maxWait>
            <testOnBorrow>true</testOnBorrow>
            <validationQuery>SELECT 1</validationQuery>
            <validationInterval>30000</validationInterval>
        </configuration>
    </definition>
</datasource>

Change the username and password values of the relevant elements to match your MySQL credentials.

Navigate to the <APIM_HOME>/repository/conf/registry.xml file and specify the following configuration for both the worker and manager instances of the gateway:

<dbConfig name="sharedregistry">
    <dataSource>jdbc/WSO2SharedDB</dataSource>
</dbConfig>

<remoteInstance url="https://gateway.apim-wso2.com/registry">
    <id>instanceid</id>
    <dbConfig>sharedregistry</dbConfig>
    <readOnly>false</readOnly>
    <enableCache>true</enableCache>
    <registryRoot>/</registryRoot>
</remoteInstance>

<mount path="/_system/config" overwrite="true">
    <instanceId>instanceid</instanceId>
    <targetPath>/_system/apimnodes</targetPath>
</mount>

<mount path="/_system/governance" overwrite="true">
    <instanceId>instanceid</instanceId>
    <targetPath>/_system/governance</targetPath>
</mount>

Configure the user management database to point to the MySQL database.

To configure the user management database, edit <APIM_HOME>/repository/conf/datasources/master-datasources.xml as shown below in both the gateway worker and manager nodes:

<datasource>
    <name>WSO2_UM_DB</name>
    <description>The datasource used for registry and user manager</description>
    <jndiConfig>
        <name>jdbc/WSO2UmDB</name>
    </jndiConfig>
    <definition type="RDBMS">
        <configuration>
            <url>jdbc:mysql://localhost:3306/wso2apimum_db</url>
            <username>root</username>
            <password>root</password>
            <driverClassName>com.mysql.jdbc.Driver</driverClassName>
            <maxActive>50</maxActive>
            <maxWait>60000</maxWait>
            <testOnBorrow>true</testOnBorrow>
            <validationQuery>SELECT 1</validationQuery>
            <validationInterval>30000</validationInterval>
        </configuration>
    </definition>
</datasource>

Make sure to replace the username and password with your MySQL database username and password.

To configure the datasource, update the dataSource property found in <APIM_HOME>/repository/conf/user-mgt.xml as shown below in both gateway nodes:

<Property name="dataSource">jdbc/WSO2UmDB</Property>

Configuring the Manager node

Configuring clustering for the manager node is similar to the way you configured it for the ELB node, but the domain is wso2.gw.domain, the localMemberPort is 4100 instead of 4000, and you define the ELB node as the well-known member.

- Open the <APIM_GW_MGR_HOME>/repository/conf/axis2/axis2.xml file.

Locate the Clustering section and verify or configure the properties as follows (some of these properties are already set correctly by default):

Enable clustering for this node:

<clustering class="org.wso2.carbon.core.clustering.hazelcast.HazelcastClusteringAgent" enable="true">

Set the membership scheme to wka to enable the Well Known Address registration method (this node will send cluster initiation messages to WKA members that we will define later):

<parameter name="membershipScheme">wka</parameter>

Specify the name of the cluster this node will join:

<parameter name="domain">wso2.gw.domain</parameter>

Specify the port used to communicate cluster messages:

<parameter name="localMemberPort">4100</parameter>

Note: This port number will not be affected by the port offset in carbon.xml. If this port number is already assigned to another server, the clustering framework will automatically increment this port number. However, if two servers are running on the same machine, you must ensure that a unique port is set for each server.

Specify the host name of this member:

<parameter name="localMemberHost">mgt.apim.wso2.com</parameter>

In the properties parameter, map the ELB's ports 80 and 443 to this node's HTTP and HTTPS ports (9764 and 9444 with offset 1) and set the sub-domain to mgt. Then list the ELB as a well-known member:

<parameter name="properties">
    <property name="backendServerURL" value="https://${hostName}:${httpsPort}/services/"/>
    <property name="mgtConsoleURL" value="https://${hostName}:${httpsPort}/"/>
    <!-- Manager Setup with Port Mapping-->
    <property name="port.mapping.80" value="9764"/>
    <property name="port.mapping.443" value="9444"/>
    <property name="subDomain" value="mgt"/>
    <!-- Worker Setup-->
    <!--property name="subDomain" value="worker"/-->
</parameter>

<!--
The list of static or well-known members. These entries will only be
valid if the "membershipScheme" above is set to "wka"
-->
<members>
    <member>
        <hostName>elb.wso2.com</hostName>
        <port>5000</port>
    </member>
</members>

Because we are running six Carbon-based servers on the same machine, we must change the port offset to avoid port conflicts. Additionally, we will add the cluster host name so that any requests sent to the manager host are redirected to the cluster, where the ELB will pick them up and manage them.

Open <APIM_GW_MGR_HOME>/repository/conf/carbon.xml.

Locate the <Ports> tag and change the value of its <Offset> sub-tag to:

<Offset>1</Offset>

Also change the management host name as below:

<MgtHostName>mgt.apim.wso2.com</MgtHostName>

Also map mgt.apim.wso2.com to the manager node's IP address (127.0.0.1 in this single-machine setup) in /etc/hosts, because the worker node uses this host name to reach the manager as a well-known member.

Enabling DepSync on the manager node

Configure DepSync in the carbon.xml file by making the following changes in the <DeploymentSynchronizer> tag:

<DeploymentSynchronizer>
    <Enabled>true</Enabled>
    <AutoCommit>true</AutoCommit>
    <AutoCheckout>true</AutoCheckout>
    <RepositoryType>svn</RepositoryType>
    <SvnUrl>https://svn.example.com/depsync.repo/</SvnUrl>
    <SvnUser>repouser</SvnUser>
    <SvnPassword>repopassword</SvnPassword>
    <SvnUrlAppendTenantId>true</SvnUrlAppendTenantId>
</DeploymentSynchronizer>

Here you need to provide a valid URL for the SVN location, along with the SVN username and password. You can follow this link to create a local SVN repository.

After configuring DepSync, copy the [1] svnClientBundle-1.0.0.jar and [2] trilead-ssh2-1.0.0-build215.jar files into the <APIM_GW_MGR_HOME>/repository/components/lib directory.

Start the APIM server by typing the following command in the terminal:

sh <APIM_GW_MGR_HOME>/bin/wso2server.sh -Dsetup

Configuring the Worker Node

Configuring clustering for the worker nodes is similar to the way you configured it for the manager node, but the localMemberPort will vary for each worker node, you set the subDomain property to worker, and you add both the ELB and the Gateway manager node to the well-known members, as described in the following steps.

- Open the <APIM_GW_WKR_HOME>/repository/conf/axis2/axis2.xml file.

Locate the Clustering section and verify or configure the properties as follows (some of these properties are already set correctly by default):

- Enable clustering for this node:
    <clustering class="org.wso2.carbon.core.clustering.hazelcast.HazelcastClusteringAgent" enable="true">
- Set the membership scheme to wka to enable the Well Known Address registration method (this node will send cluster initiation messages to WKA members that we will define later):
    <parameter name="membershipScheme">wka</parameter>
- Specify the name of the cluster this node will join:
    <parameter name="domain">wso2.gw.domain</parameter>
- Specify the port used to communicate cluster messages:
    <parameter name="localMemberPort">4101</parameter>
- Define the sub-domain as worker by adding the following property under the <parameter name="properties"> element:
    <property name="subDomain" value="worker"/>

Define the ELB and manager nodes as well-known members of the cluster by providing their host names and ports: for the ELB, this is the group_mgt_port (5000) from loadbalancer.conf; for the manager, it is its localMemberPort (4100). The manager node is defined here because it is required for the Deployment Synchronizer to function.

<members>
    <member>
        <hostName>elb.wso2.com</hostName>
        <port>5000</port>
    </member>
    <member>
        <hostName>mgt.apim.wso2.com</hostName>
        <port>4100</port>
    </member>
</members>
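With three clustering configurations in place, it is worth checking that they agree with each other: every node on this machine needs its own localMemberPort, and every well-known member must point at a host and port that some node actually listens on for cluster traffic. The sketch below records the values used in this post in a plain dictionary; the dictionary layout and the `check_cluster` helper are illustrative, not a WSO2 format. The worker's localMemberHost is not set in this post, so it is left out.

```python
# Cluster settings from this post; the layout of this dictionary is illustrative only.
NODES = {
    "ELB": {"host": "elb.wso2.com", "localMemberPort": 4000, "groupMgtPort": 5000},
    "APIM_GW_MGR": {"host": "mgt.apim.wso2.com", "localMemberPort": 4100,
                    "members": [("elb.wso2.com", 5000)]},
    "APIM_GW_WKR": {"localMemberPort": 4101,  # localMemberHost not set in this post
                    "members": [("elb.wso2.com", 5000), ("mgt.apim.wso2.com", 4100)]},
}


def check_cluster(nodes: dict) -> list:
    """Return problems with local member ports and well-known member references."""
    problems = []
    seen_ports = {}
    listeners = set()
    for name, node in nodes.items():
        port = node["localMemberPort"]
        # All nodes run on one machine, so local member ports must be unique.
        if port in seen_ports:
            problems.append(f"{name} and {seen_ports[port]} share localMemberPort {port}")
        seen_ports[port] = name
        host = node.get("host")
        if host:
            listeners.add((host, port))
            if "groupMgtPort" in node:
                listeners.add((host, node["groupMgtPort"]))
    for name, node in nodes.items():
        for member in node.get("members", []):
            if tuple(member) not in listeners:
                problems.append(
                    f"{name}: no node listens on well-known member {member[0]}:{member[1]}")
    return problems
```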

Because we are running six Carbon-based servers on the same machine, we must change the port offset to avoid port conflicts.

Open <APIM_GW_WKR_HOME>/repository/conf/carbon.xml.

Locate the <Ports> tag and change the value of its <Offset> sub-tag to:

<Offset>2</Offset>

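Configure DepSync in the carbon.xml file by making the following changes in the <DeploymentSynchronizer> tag. Unlike the manager, the worker sets AutoCommit to false, so it only checks out the artifacts that the manager commits: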

<DeploymentSynchronizer>
    <Enabled>true</Enabled>
    <AutoCommit>false</AutoCommit>
    <AutoCheckout>true</AutoCheckout>
    <RepositoryType>svn</RepositoryType>
    <SvnUrl>https://svn.example.com/depsync.repo/</SvnUrl>
    <SvnUser>repouser</SvnUser>
    <SvnPassword>repopassword</SvnPassword>
    <SvnUrlAppendTenantId>true</SvnUrlAppendTenantId>
</DeploymentSynchronizer>

Here you need to provide the same SVN URL, username, and password that you used for the manager node. You can follow this link to create a local SVN repository.

After configuring DepSync, copy the [1] svnClientBundle-1.0.0.jar and [2] trilead-ssh2-1.0.0-build215.jar files into the <APIM_GW_WKR_HOME>/repository/components/lib directory.
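Since the manager and worker share one SVN location, a common mistake is enabling AutoCommit on more than one node, or pointing the nodes at different repositories. The sketch below reads the `<DeploymentSynchronizer>` settings from each node's carbon.xml text and checks that DepSync and AutoCheckout are enabled everywhere, that exactly one node auto-commits, and that all nodes share one SvnUrl. carbon.xml typically declares a default XML namespace on its root `<Server>` element, so the sketch matches element names with any namespace prefix stripped; the helper names are hypothetical.

```python
import xml.etree.ElementTree as ET


def _local(tag: str) -> str:
    """Drop a '{namespace}' prefix so names match with or without a default namespace."""
    return tag.rsplit("}", 1)[-1]


def depsync_settings(carbon_xml: str) -> dict:
    """Return the children of <DeploymentSynchronizer> as a name -> text dict."""
    root = ET.fromstring(carbon_xml)
    for element in root.iter():
        if _local(element.tag) == "DeploymentSynchronizer":
            return {_local(child.tag): (child.text or "").strip() for child in element}
    raise ValueError("no <DeploymentSynchronizer> element found")


def check_depsync(nodes: dict) -> list:
    """nodes maps a node name to its carbon.xml text; returns a list of problems."""
    problems, committers, urls = [], [], set()
    for name, xml_text in nodes.items():
        settings = depsync_settings(xml_text)
        if settings.get("Enabled") != "true":
            problems.append(f"{name}: DepSync is not enabled")
        if settings.get("AutoCheckout") != "true":
            problems.append(f"{name}: AutoCheckout is not true")
        if settings.get("AutoCommit") == "true":
            committers.append(name)
        urls.add(settings.get("SvnUrl", ""))
    if len(committers) != 1:
        problems.append(f"expected exactly one AutoCommit node, found {committers}")
    if len(urls) > 1:
        problems.append(f"nodes point at different SvnUrl values: {sorted(urls)}")
    return problems
```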

Start the APIM server by typing the following command in the terminal:

sh <APIM_GW_WKR_HOME>/bin/wso2server.sh

The setup is now complete, and you can access the Publisher and Store nodes from the following URLs.
