Before the hype around Microservices Architecture has even died down, another term is making the rounds in technology forums: Serverless Architecture, or Serverless Computing. The concept is not entirely new, but it has only recently become a hot topic. It has been around for some time in the form of Backend as a Service (BaaS), also known as Mobile Backend as a Service (MBaaS). But it operated at a different scale before AWS Lambda, Azure Functions and Google Cloud Functions came into the picture with their own serverless solutions.

In layman’s terms, Serverless Architecture or Serverless Computing means that your backend logic runs on a third party vendor’s server infrastructure, which you don’t need to worry about. It does not mean that there is no server running your backend logic; rather, you do not need to maintain it. That is the business of third party vendors like AWS, Azure and Google. Serverless computing has two variants.

- Backend as a Service (BaaS or MBaaS — M for Mobile)

- Function as a Service (FaaS)

With BaaS or MBaaS, the backend logic runs on a third party vendor’s platform. Application developers do not need to provision or maintain the servers or the infrastructure that runs these backend services. In most cases, the backend services run continuously once they are started, and application developers pay the hosting vendor a subscription, typically on a weekly, monthly or yearly basis. Another important aspect of BaaS is that it runs on shared infrastructure, and the same backend service is used by multiple different applications.

The second variant, Function as a Service or FaaS, is the more popular one these days. Popular offerings like AWS Lambda, Microsoft Azure Functions and Google Cloud Functions all fall into this category. With FaaS platforms, application developers (users) implement their own backend logic as functions and run them within the serverless framework. The framework takes care of actually running this functionality on a server, and it takes over the scalability, reliability and security aspects as well. Different vendors support different programming languages for implementing these functions, including popular ones like Java and C#.
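To make the idea concrete, here is a minimal sketch of what such a function can look like in Python, using the `handler(event, context)` shape that AWS Lambda expects from Python functions. The function name `greet_handler`, the `name` field and the greeting logic are made up for illustration; on a real platform the runtime, not your code, decides when and where the function is called.

```python
import json


def greet_handler(event, context):
    """Entry point the FaaS platform calls once per invocation.

    `event` carries the trigger payload; `context` carries runtime metadata.
    The `name` field is a hypothetical input chosen for this sketch.
    """
    name = (event or {}).get("name", "world")
    return {"message": f"Hello, {name}!"}


if __name__ == "__main__":
    # Local invocation; on a real platform the runtime makes this call.
    print(json.dumps(greet_handler({"name": "serverless"}, None)))
```

Note that the function contains only business logic: there is no server setup, port binding or scaling code anywhere in it.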

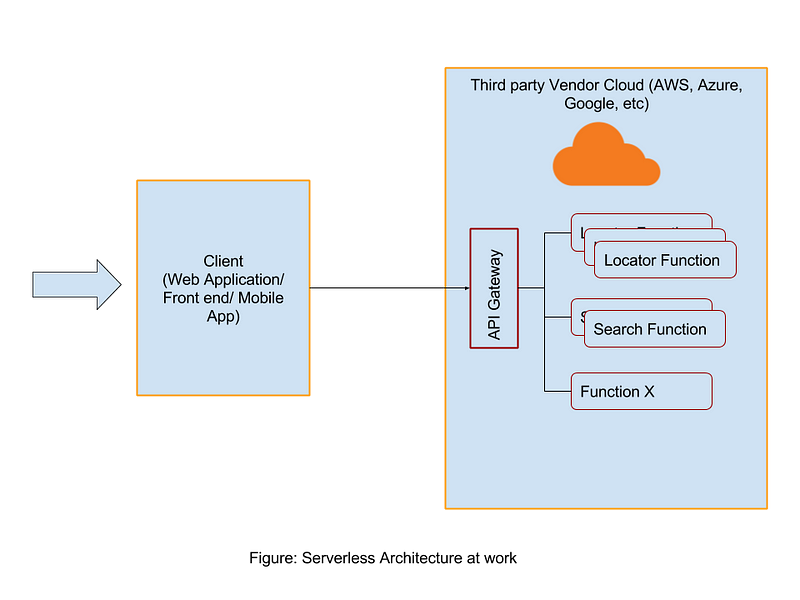

Once these functions are implemented and deployed on the FaaS platform, the services they offer can be triggered via events from vendor specific utilities or via HTTP requests. The most popular FaaS platform, AWS Lambda, lets users trigger functions through HTTP requests by placing Amazon API Gateway in front of the Lambda functions. FaaS differs from BaaS in a few main ways.

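- A FaaS function runs for a short period of time (AWS Lambda, for example, caps a single invocation at 15 minutes; the limit was originally 5 minutes)

- You pay only for the resources actually used, metered per invocation and per unit of execution time, rather than through a flat subscription

- Ideal for hugely fluctuating traffic as well as typical user traffic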
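The pay-per-use point can be illustrated with a bit of arithmetic. The sketch below compares a flat monthly subscription with per-invocation billing. Every price in it is a made-up placeholder, not a real vendor rate, and the formula (requests × duration × memory × unit price, plus a per-request fee) is a simplification of how FaaS vendors typically bill.

```python
def faas_monthly_cost(requests, avg_duration_s, memory_gb,
                      price_per_gb_second, price_per_request):
    """Estimate a month's pay-per-use bill (all prices are hypothetical)."""
    compute = requests * avg_duration_s * memory_gb * price_per_gb_second
    return compute + requests * price_per_request


# Hypothetical figures chosen only for illustration.
FLAT_SUBSCRIPTION = 50.0          # always-on backend, per month
PRICE_PER_GB_SECOND = 0.00002     # placeholder unit price
PRICE_PER_REQUEST = 0.0000002     # placeholder unit price

for monthly_requests in (100_000, 10_000_000, 500_000_000):
    cost = faas_monthly_cost(monthly_requests, 0.2, 0.5,
                             PRICE_PER_GB_SECOND, PRICE_PER_REQUEST)
    cheaper = "FaaS" if cost < FLAT_SUBSCRIPTION else "flat subscription"
    print(f"{monthly_requests:>11,} requests: {cost:10.2f} -> {cheaper}")
```

With these placeholder numbers, pay-per-use is cheaper at low and moderate volume, and the flat subscription wins only at sustained high volume. That is why FaaS suits traffic that fluctuates heavily.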

All the above points suggest that Serverless Computing or Serverless Architecture is worth a try. It simplifies the maintenance of backend systems while offering cost benefits across very different traffic patterns. But as Newton’s third law says, every action has a reaction, and there are some things we need to be careful about when dealing with serverless architectures.

- Limited monitoring and debugging visibility into the production system and its behavior. Users have to trust whatever monitoring options the vendor provides

- Vendor lock-in can cause problems like frequent mandatory API changes, pricing structure changes and technology changes

- Latency on initial requests (cold starts) can make it challenging to offer good SLAs across multiple concurrent users

- Since server instances come and go, maintaining the state of an application is really challenging with these types of frameworks

- Not suitable for long running business processes, since function (service) instances are destroyed after a fixed time duration

- There are other limitations too. For example, the maximum TPS that a given function can handle within a given user account (AWS) is fixed by the vendor

- End to end testing or integration testing is not easy when the functions come and go
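The state problem in particular is usually handled by keeping the function itself stateless and pushing state to an external store. The sketch below shows the pattern. `InMemoryStore` is a hypothetical stand-in for a managed database or cache, and `count_visit_handler` and the `user_id` field are illustrative names, not part of any vendor API.

```python
class InMemoryStore:
    """Hypothetical stand-in for an external store (database, cache)."""

    def __init__(self):
        self._data = {}

    def get(self, key, default=0):
        return self._data.get(key, default)

    def put(self, key, value):
        self._data[key] = value


def count_visit_handler(event, context, store):
    """Stateless handler: all state is read from and written to `store`.

    Nothing is kept in module-level variables, because the instance
    serving the next request may be a freshly started one.
    """
    user_id = event["user_id"]
    visits = store.get(user_id) + 1
    store.put(user_id, visits)
    return {"user_id": user_id, "visits": visits}


if __name__ == "__main__":
    store = InMemoryStore()  # in production this would live outside the function
    count_visit_handler({"user_id": "alice"}, None, store)
    # A "new instance" handling the next request still sees the earlier visit.
    print(count_visit_handler({"user_id": "alice"}, None, store))
```

Passing the store in as a parameter also helps with the testing concern, since the handler can be exercised locally against a fake store. A real store would additionally need an atomic increment, because the read-then-write shown here is not safe under concurrent invocations.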

Despite all these limitations, things are likely to change as more vendors arrive with improved versions of their platforms.

Another important thing is the difference between Platform as a Service (PaaS) and FaaS (or BaaS or MBaaS). With PaaS platforms, users can implement their business logic in a variety of programming languages and with other well known technologies. There are always servers running in the backend specifically for the user’s applications, and they run continuously. Because of this, PaaS pricing comes in large chunks, such as weekly, monthly or yearly. Automatic scaling of resources is also not that easy with these platforms. If you need fine-grained pricing and automatic scaling, a Serverless Computing platform (FaaS) would be a better choice.

Finally, given the popularity of Microservices Architecture (MSA), Serverless Architecture is well suited for adopting an MSA without the hassle of maintaining servers or the headaches of scalability and availability. Even though Serverless Computing has the capability to extend the popularity of MSA, it has some limitations in practical implementations because so many of its concepts are vendor specific. Hopefully these limitations will eventually go away with tools like the Serverless Framework.
